Background and definition of HDFS

In the era of information explosion, the amount of data available has grown exponentially, and a single machine often cannot store it all. Simply adding hard disks to expand one computer's file system falls short in capacity, capacity growth rate, data backup, and data security. Spreading the data across disks managed by more operating systems (that is, more machines) solves the capacity problem, but makes unified management and maintenance inconvenient.

Thus the distributed file system was born: a file system that manages files stored across multiple hosts. Hadoop is a foundational distributed-systems framework for big data, and HDFS is one of its core components.

HDFS (Hadoop Distributed File System) is, first, a file system: it stores files and locates them through a directory tree. Second, it is distributed: many servers work together to provide its functions. HDFS suits write-once, read-many workloads, where a file does not need to change after it has been created, written, and closed.

While studying YARN's scheduling, I also noticed the speed advantage of distributed storage. I will explain the specific reasons in future study notes.

Advantages and disadvantages of HDFS

Advantages

- High fault tolerance

When HDFS stores data, it automatically saves copies on different nodes, and when one node's copy is lost, the data is automatically restored from the remaining copies.

- Suitable for processing large files and large volumes of data.

- It can be deployed on low-cost hardware, using multiple copies of the data to improve reliability.
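The fault-tolerance idea can be illustrated with a toy simulation. This is not how HDFS actually chooses nodes (its real placement policy is rack-aware); the round-robin placement, the node names, the function names, and the replication factor of 3 are simplifying assumptions made for illustration.

```python
from itertools import cycle


def place_replicas(blocks, nodes, replication=3):
    """Give every block `replication` distinct holders, walking the nodes in a ring."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    ring = cycle(nodes)
    return {blk: {next(ring) for _ in range(replication)} for blk in blocks}


def handle_node_failure(placement, dead, nodes, replication=3):
    """Forget the dead node, then copy under-replicated blocks to other live nodes."""
    live = [n for n in nodes if n != dead]
    for holders in placement.values():
        holders.discard(dead)
        for node in live:
            if len(holders) >= replication:
                break
            holders.add(node)  # stands in for copying from a surviving replica
    return placement


nodes = ["dn1", "dn2", "dn3", "dn4"]
placement = place_replicas(["blk_1", "blk_2"], nodes)
print("before:", placement)

handle_node_failure(placement, "dn1", nodes)
print("after: ", placement)
```

In this model every block still has three holders after dn1 disappears, because each lost copy is recreated from one of the two that survived.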

Disadvantages

- Not suitable for low-latency data access, such as reading or writing data at millisecond latency. Real-time data storage calls for components such as Kafka, but that is another story.

- Unable to store large numbers of small files efficiently:

  - Storing a large number of small files takes up a lot of NameNode memory for the file directory and block information. This is not advisable because NameNode memory is always limited.

  - For small files, the seek time exceeds the read time, which violates the design goals of HDFS.

- Concurrent writes and random file modification are not supported: a file can have only one writer at a time, and data can only be appended, not modified in place.
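To get a feel for the small-file problem, here is a rough back-of-the-envelope estimate. It assumes the commonly quoted rule of thumb of about 150 bytes of NameNode memory per namespace object (one per file, one per block). That figure is not from these notes and real usage varies by version and configuration, so treat the results as orders of magnitude only. The function name is made up for illustration.

```python
BYTES_PER_OBJECT = 150          # assumed rule of thumb, not a measured value
BLOCK_SIZE = 128 * 1024 * 1024  # default block size in Hadoop 2.x/3.x


def estimate_namenode_bytes(num_files, file_size, block_size=BLOCK_SIZE):
    """Rough NameNode memory estimate: one object per file plus one per block."""
    blocks_per_file = -(-file_size // block_size)  # integer ceiling division
    objects = num_files * (1 + blocks_per_file)
    return objects * BYTES_PER_OBJECT


total_data = 1024 ** 4  # 1 TiB of data, stored two different ways

# As 1 MiB files: 1,048,576 files with one block each.
small = estimate_namenode_bytes(total_data // 1024 ** 2, 1024 ** 2)
# As 1 GiB files: 1,024 files with eight blocks each.
large = estimate_namenode_bytes(total_data // 1024 ** 3, 1024 ** 3)

print(f"1 MiB files: {small / 1024 ** 2:.1f} MiB of NameNode memory")
print(f"1 GiB files: {large / 1024 ** 2:.1f} MiB of NameNode memory")
```

Under these assumptions the same terabyte costs over two hundred times more NameNode memory when it is stored as 1 MiB files instead of 1 GiB files.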

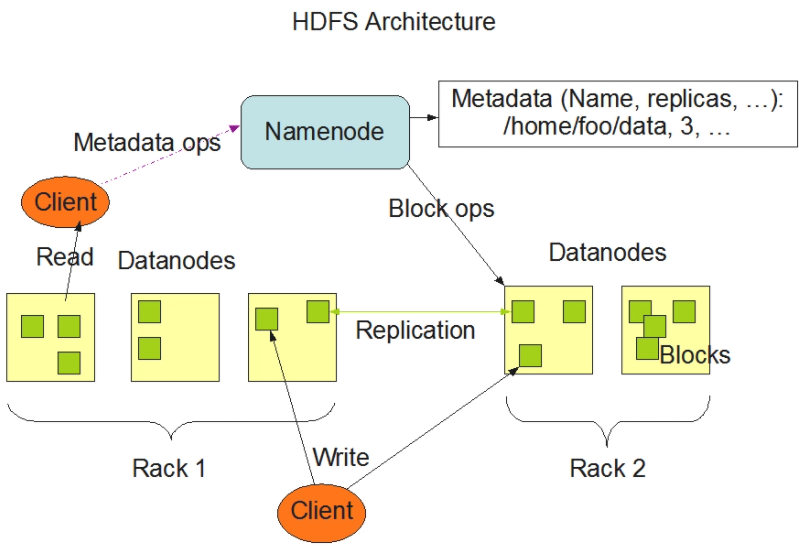

Architecture and principle of HDFS

NameNode (nn): the master; the supervisor and manager of the system.

- Manage the HDFS namespace

- Manage the replication policy

- Manage block mapping information

- Handle client read and write requests

DataNode: the slave; executes the commands issued by the NameNode.

- Store the actual data blocks

- Perform read/write operations of data blocks

Client

- File segmentation. When uploading a file to HDFS, the client splits the file into blocks and then uploads them one by one.

- Interact with the nn to get file location information

- Interact with DataNodes to read or write data

- Provide some commands to manage HDFS

- Provide some commands to access HDFS, such as creating, deleting, querying, and modifying files.
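The division of labour between client, NameNode, and DataNode can be sketched with a small in-memory model. This is only a toy: the class and method names are invented, there is no replication or networking, and the round-robin choice of DataNode is an assumption. What it does show is that the NameNode only ever handles metadata while the file bytes go straight to and from the DataNodes.

```python
class NameNode:
    """Holds metadata only: which blocks make up a file and where each one lives."""

    def __init__(self, datanode_names):
        self.datanode_names = list(datanode_names)
        self.files = {}      # path -> ordered list of block ids
        self.locations = {}  # block id -> DataNode name

    def allocate_block(self, path):
        """Register the next block of `path` and pick a DataNode (round-robin here)."""
        index = len(self.locations)
        block_id = f"blk_{index}"
        target = self.datanode_names[index % len(self.datanode_names)]
        self.files.setdefault(path, []).append(block_id)
        self.locations[block_id] = target
        return block_id, target

    def get_block_locations(self, path):
        return [(blk, self.locations[blk]) for blk in self.files[path]]


class DataNode:
    """Holds the actual block contents."""

    def __init__(self):
        self.blocks = {}  # block id -> bytes


def write_file(path, data, block_size, namenode, datanodes):
    """Client side: split the data into blocks and send each to its DataNode."""
    for start in range(0, len(data), block_size):
        block_id, target = namenode.allocate_block(path)
        datanodes[target].blocks[block_id] = data[start:start + block_size]


def read_file(path, namenode, datanodes):
    """Client side: ask the NameNode for locations, then fetch from the DataNodes."""
    return b"".join(datanodes[dn].blocks[blk]
                    for blk, dn in namenode.get_block_locations(path))


datanodes = {"dn1": DataNode(), "dn2": DataNode()}
namenode = NameNode(datanodes)
write_file("/demo.txt", b"hello hdfs!", 4, namenode, datanodes)  # tiny 4-byte blocks

print(namenode.get_block_locations("/demo.txt"))
print(read_file("/demo.txt", namenode, datanodes))
```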

Secondary NameNode (2nn): note that the 2nn is not a hot standby for the nn. When the nn goes down, the 2nn cannot immediately take its place and serve requests. If the nn is the boss, the 2nn is more like a secretary.

- Take some workload off the nn, for example by periodically merging Fsimage and Edits and pushing the result back to the nn.

- In an emergency, it can assist in restoring the nn.
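The merge performed by the 2nn can be pictured with a toy model. The sketch below is not Hadoop code: it treats the Fsimage as a plain dictionary snapshot of the namespace and Edits as a list of operations recorded since that snapshot. The function name and the operation format are invented for illustration.

```python
def checkpoint(fsimage, edits):
    """Replay the logged operations on a copy of the snapshot; return the new snapshot."""
    merged = dict(fsimage)  # leave the old snapshot untouched
    for op, path, *args in edits:
        if op == "create":
            merged[path] = args[0]  # args[0] is the file's list of block ids
        elif op == "delete":
            merged.pop(path, None)
        elif op == "rename":
            merged[args[0]] = merged.pop(path)  # args[0] is the new path
        else:
            raise ValueError(f"unknown operation: {op}")
    return merged


fsimage = {"/logs/a.log": ["blk_1"], "/tmp/old": ["blk_2"]}
edits = [
    ("create", "/data/b.csv", ["blk_3", "blk_4"]),
    ("delete", "/tmp/old"),
    ("rename", "/logs/a.log", "/archive/a.log"),
]

new_fsimage = checkpoint(fsimage, edits)
print(new_fsimage)
```

In this model the edit log can start again from empty once the merged snapshot exists, which is why periodic merging keeps the log short and gives a restarting nn less to replay.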

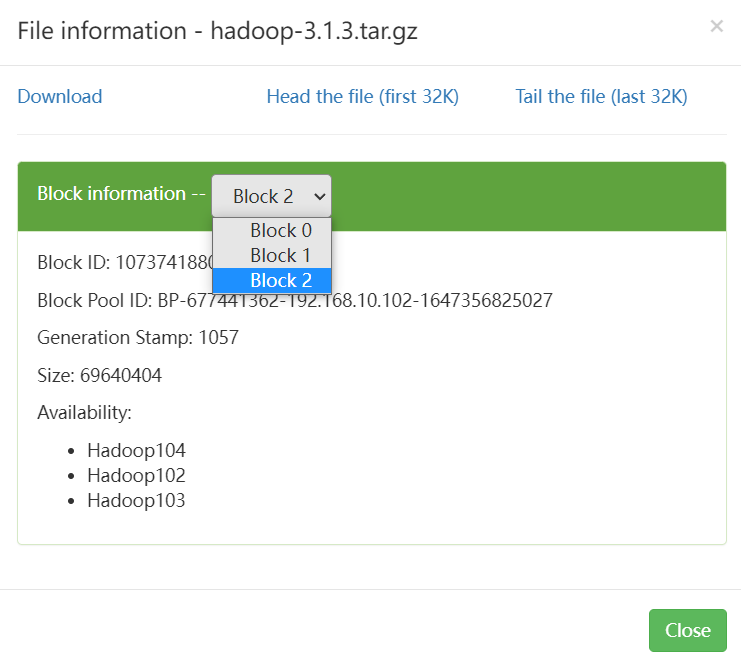

Data Block in HDFS

Files in HDFS are broken into block-sized chunks called data blocks. These blocks are stored as independent units. The default block size is 128 MB in Hadoop 2.x/3.x and 64 MB in 1.x. We can configure the block size as needed by changing the dfs.blocksize property (dfs.block.size is its older, deprecated name) in hdfs-site.xml.

HDFS distributes these blocks across the DataNodes, and the NameNode stores the metadata about the block locations.

Example:

When I upload a 322 MB file, it can be found in the NameNode web UI on port 9870. Checking the file's block information, I find that the first and second blocks are both 134,217,728 bytes (128 MB), which is the default block size, while the third block is 69,640,404 bytes (about 66 MB). The three block sizes add up to the size of the file.
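The arithmetic in this example can be reproduced with a few lines of Python. The function name is hypothetical, and the total file size is taken to be the sum of the three block sizes reported above.

```python
DEFAULT_BLOCK_SIZE = 128 * 1024 * 1024  # 134,217,728 bytes


def split_into_blocks(file_size, block_size=DEFAULT_BLOCK_SIZE):
    """Return the sizes of the blocks that a file of `file_size` bytes occupies."""
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block holds only what is left over
    return sizes


file_size = 2 * 134_217_728 + 69_640_404  # total size of the uploaded file in bytes
blocks = split_into_blocks(file_size)

print(blocks)
print(round(file_size / 1024 ** 2, 1), "MB in", len(blocks), "blocks")
```

Note that the last block is not padded out to 128 MB; it is only as large as the data remaining in the file.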

Summary

The easiest way to operate HDFS is to use shell commands from inside the cluster. The official Apache Hadoop website has a detailed command guide.

If you need to access a remote cluster from a Windows environment (such as an office machine), the HDFS API is a good solution. I usually write API code in a Maven project because Maven makes it easy to download the dependent jar packages, and I strongly recommend that all beginners build their projects with Maven.
